New in .NET 8.0 [32]: Further New Features in System.Text.Json 8.0

dsregcmd, WAM and 'Connected to Windows'-Accounts

This is more of a “Today-I-Learned” post and not a “full-blown How-To article.” If something is completely wrong, please let me know - thanks!

I was researching if it is possible to have a “real” single-sign-on experience with Azure AD/Entra ID and third-party desktop applications and I stumbled across a few things during my trip.

“Real” SSO?

There are a bunch of definitions out there about SSO. Most of the time, SSO just means: You can use the same account in different applications.

But some argue that a “real” SSO experience should mean: You log in to your Windows Desktop environment, and that’s it - each application should just use the existing Windows account.

Problems

With “Integrated Windows Auth,” this was quite easy, but with Entra ID, it seems really hard. Even Microsoft seems to struggle with this task, because even Microsoft Teams and Office need at least a hint like an email address to sign in the actual user.

Solution?

I didn’t find a solution for this (complex) problem, but I found a few interesting tools/links that might help achieve it.

Please let me know if you find a solution 😉

“dsregcmd”

There is a tool called dsregcmd, which stands for “Directory Service Registration” and shows how your device is connected to Azure AD.

    PS C:\Users\muehsig> dsregcmd /?
    DSREGCMD switches
        /? : Displays the help message for DSREGCMD
        /status : Displays the device join status
        /status_old : Displays the device join status in old format
        /join : Schedules and monitors the Autojoin task to Hybrid Join the device
        /leave : Performs Hybrid Unjoin
        /debug : Displays debug messages
        /refreshprt : Refreshes PRT in the CloudAP cache
        /refreshp2pcerts : Refreshes P2P certificates
        /cleanupaccounts : Deletes all WAM accounts
        /listaccounts : Lists all WAM accounts
        /UpdateDevice : Update device attributes to Azure AD

In Windows 11 - as far as I know - a new command was implemented: /listaccounts

dsregcmd /listaccounts

This command lists all “WAM” accounts from my current profile:

The ...xxxx... is used to hide information

    PS C:\Users\muehsig> dsregcmd /listaccounts
    Call ListAccounts to list WAM accounts from the current user profile.
    User accounts:
    Account: u:a17axxxx-xxxx-xxxx-xxxx-1caa2b93xxxx.85c7xxxx-xxxx-xxxx-xxxx-34dc6b33xxxx, user: xxxx.xxxx@xxxx.com, authority: https://login.microsoftonline.com/85c7xxxx-xxxx-xxxx-xxxx-34dc6b33xxxx.
    Accounts found: 1.
    Application accounts:
    Accounts found: 0.
    Default account: u:a17axxxx-xxxx-xxxx-xxxx-1caa2b93xxxx.85c7xxxx-xxxx-xxxx-xxxx-34dc6b33xxxx, user: xxxx.xxxx@xxxx.com.

What is WAM?

It’s not the cool x-mas band with the fancy song (that we all love!).

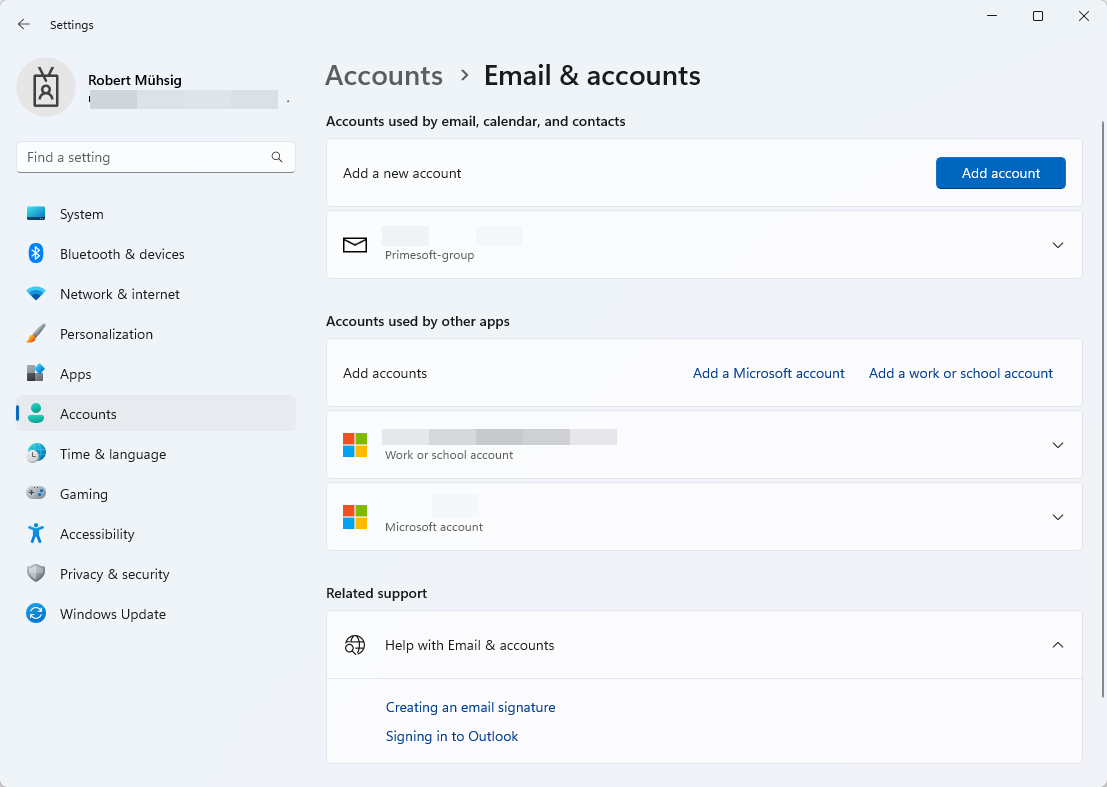

WAM stands for Web Account Manager and it integrates with the Windows Email & accounts setting:

WAM can also be used to obtain a token - which might be the right direction for my SSO question, but I couldn’t find the time to test this.
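As a rough, untested sketch of what "obtaining a token via WAM" could look like: the snippet below assumes the MSAL.NET packages Microsoft.Identity.Client and Microsoft.Identity.Client.Broker, an existing app registration (the client ID, tenant ID and the `User.Read` scope are placeholders, not values from this post), and a redirect URI configured for the broker. It asks WAM silently for a token for the account that is signed in to Windows. Whether this gives the "real" SSO experience described above is exactly the open question.

```csharp
using System;
using Microsoft.Identity.Client;
using Microsoft.Identity.Client.Broker; // NuGet: Microsoft.Identity.Client.Broker

// Placeholders (assumptions): replace with your own app registration.
const string clientId = "<CLIENT ID>";
const string tenantId = "<TENANT ID>";
string[] scopes = { "User.Read" };

// Build a public client that routes authentication through WAM on Windows.
IPublicClientApplication app = PublicClientApplicationBuilder
    .Create(clientId)
    .WithAuthority(AzureCloudInstance.AzurePublic, tenantId)
    .WithBroker(new BrokerOptions(BrokerOptions.OperatingSystems.Windows))
    .Build();

try
{
    // Try to get a token for the Windows account without showing any UI.
    AuthenticationResult result = await app
        .AcquireTokenSilent(scopes, PublicClientApplication.OperatingSystemAccount)
        .ExecuteAsync();

    Console.WriteLine($"Signed in as {result.Account.Username}");
}
catch (MsalUiRequiredException)
{
    // Silent sign-in was not possible; an interactive call would be needed here.
    Console.WriteLine("Interaction required.");
}
```

If the silent call fails, the application would still have to fall back to an interactive prompt, so this only covers the happy path.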

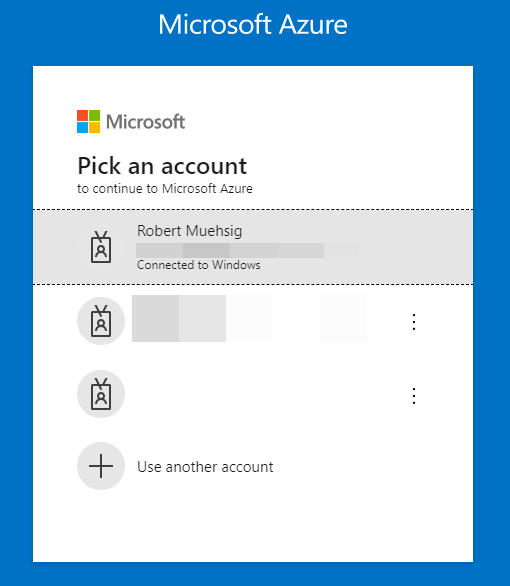

“Connected to Windows”

This is now pure speculation, because I couldn’t find any information about it, but I think the “Connected to Windows” hint here:

… is based on the Email & accounts setting (= WAM), and with dsregcmd /listaccounts I can see diagnostic information about it.

“Seamless single sign-on”

I found this troubleshooting guide and it seems that there is a thing called “seamless single sign-on”, but I’m not 100% sure if this is more a “Development” topic or “IT-Pro” topic (or a mix of both).

TIL

I (and you!) have learned about a tool called dsregcmd.

Try out dsregcmd /status: it’s like ipconfig /all, but for information about AD connectivity.

WAM plays an important part with the “Email & accounts” setting and maybe this is the right direction for the actual SSO topic.

Open questions…

Some open questions:

- Why does dsregcmd /listaccounts only list one account when I have two accounts attached under “WAM” (see screenshot - an Azure AD account AND a Microsoft account)?

- Where does “Connected to Windows” come from? How does the browser know this?

- What is “seamless single sign-on”?

Hope this helps!

New in .NET 8.0 [33]: Extension of the AOT Compiler

New in .NET 8.0 [34]: Improved Output When Compiling

UrlEncode the Space Character

This is more of a “Today-I-Learned” post and not a “full-blown How-To article.” If something is completely wrong, please let me know - thanks!

This might seem trivial, but last week I noticed that the HttpUtility.UrlEncode(string) encodes a space ` ` into +, whereas the JavaScript encodeURI(string) method encodes a space as %20.

This brings up the question:

Why?

It seems that in the early specifications, a space was encoded into a +, see this Wikipedia entry:

When data that has been entered into HTML forms is submitted, the form field names and values are encoded and sent to the server in an HTTP request message using method GET or POST, or, historically, via email.[3] The encoding used by default is based on an early version of the general URI percent-encoding rules,[4] with a number of modifications such as newline normalization and replacing spaces with + instead of %20. The media type of data encoded this way is application/x-www-form-urlencoded, and it is currently defined in the HTML and XForms specifications. In addition, the CGI specification contains rules for how web servers decode data of this type and make it available to applications.

This convention has persisted to this day. For instance, when you search something on Google or Bing with a space in the query, the space is encoded as a +.

There seem to be some rules, however: the + is only “allowed” in the query string or in form parameters.

I found the question & answers on StackOverflow quite informative, and this answer summarizes it well enough for me:

| standard     | +   | %20 |
|--------------|-----|-----|
| URL          | no  | yes |
| query string | yes | yes |
| form params  | yes | no  |
| mailto query | no  | yes |

What about .NET?

If you want to always encode spaces as %20, use the UrlPathEncode method, see here.

You can encode a URL using with the UrlEncode method or the UrlPathEncode method. However, the methods return different results. The UrlEncode method converts each space character to a plus character (+). The UrlPathEncode method converts each space character into the string “%20”, which represents a space in hexadecimal notation. Use the UrlPathEncode method when you encode the path portion of a URL in order to guarantee a consistent decoded URL, regardless of which platform or browser performs the decoding.
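To make the difference concrete, here is a small sketch that encodes the same string with both HttpUtility methods. `Uri.EscapeDataString` is added as a third option that is not mentioned above but is also built in; the input string is just an example.

```csharp
using System;
using System.Web; // HttpUtility

string input = "hello world";

// Form-style encoding: the space becomes '+'.
string formEncoded = HttpUtility.UrlEncode(input);

// Path-style encoding: the space becomes "%20".
string pathEncoded = HttpUtility.UrlPathEncode(input);

// Percent-encoding via System.Uri: the space becomes "%20" as well.
string escaped = Uri.EscapeDataString(input);

Console.WriteLine(formEncoded); // hello+world
Console.WriteLine(pathEncoded); // hello%20world
Console.WriteLine(escaped);     // hello%20world
```

On .NET Framework, HttpUtility requires a reference to System.Web; on modern .NET it is available out of the box.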

Hope this helps!

New in .NET 8.0 [35]: Security Warnings for NuGet Packages

New in .NET 8.0 [36]: Different Default Setting for dotnet publish and dotnet pack

Connection Resiliency for Entity Framework Core and SqlClient

This is more of a “Today-I-Learned” post and not a “full-blown How-To article.” If something is completely wrong, please let me know - thanks!

If you work with SQL Azure you might find this familiar:

Unexpected exception occurred: An exception has been raised that is likely due to a transient failure. Consider enabling transient error resiliency by adding ‘EnableRetryOnFailure’ to the ‘UseSqlServer’ call.

EF Core Resiliency

The above error already shows a very simple attempt to “stabilize” your application. If you are using Entity Framework Core, this could look like this:

    protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
    {
        optionsBuilder
            .UseSqlServer(
                @"Server=(localdb)\mssqllocaldb;Database=EFMiscellanous.ConnectionResiliency;Trusted_Connection=True;ConnectRetryCount=0",
                options => options.EnableRetryOnFailure());
    }

The EnableRetryOnFailure method has a couple of options, such as the maximum retry count and the maximum retry delay.
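A minimal sketch of how those options could be passed explicitly. The context name `BloggingContext`, the placeholder connection string and the concrete values (5 retries, 30 seconds) are assumptions for illustration only:

```csharp
using System;
using Microsoft.EntityFrameworkCore;

// Hypothetical context, used only to show the configuration call.
public class BloggingContext : DbContext
{
    protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
    {
        optionsBuilder.UseSqlServer(
            @"<CONNECTION STRING>",
            options => options.EnableRetryOnFailure(
                maxRetryCount: 5,                        // give up after 5 retries
                maxRetryDelay: TimeSpan.FromSeconds(30), // upper bound for the delay between retries
                errorNumbersToAdd: null));               // no additional SQL error numbers treated as transient
    }
}
```

Suitable values depend on how long your application can afford to wait before it reports the failure.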

If you don’t use the UseSqlServer method to configure your context, there are other ways to enable this behavior: See Microsoft Docs

Microsoft.Data.SqlClient - Retry Provider

If you use the “plain” Microsoft.Data.SqlClient NuGet package to connect to your database, have a look at Retry Logic Providers.

A basic implementation would look like this:

    using System;
    using Microsoft.Data.SqlClient;

    // Define the retry logic parameters
    var options = new SqlRetryLogicOption()
    {
        // Tries 5 times before throwing an exception
        NumberOfTries = 5,
        // Preferred gap time to delay before retry
        DeltaTime = TimeSpan.FromSeconds(1),
        // Maximum gap time for each delay time before retry
        MaxTimeInterval = TimeSpan.FromSeconds(20)
    };

    // Create a retry logic provider
    SqlRetryLogicBaseProvider provider = SqlConfigurableRetryFactory.CreateExponentialRetryProvider(options);

    // Assumes that connection is a valid SqlConnection object
    // Set the retry logic provider on the connection instance
    connection.RetryLogicProvider = provider;

    // Establishing the connection will retry if a transient failure occurs.
    connection.Open();

You can set a RetryLogicProvider on a SqlConnection and on a SqlCommand.

Some more links and tips

These two options seem to be the “low-level entry points”. Of course, you could also wrap each action with a library like Polly.
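To illustrate what "wrapping each action" means, here is a hand-rolled sketch of a retry wrapper with exponential back-off. It is not Polly's API; the `Retry` helper and its parameters are hypothetical and only show the idea that libraries like Polly package up as policies.

```csharp
using System;
using System.Threading;

// Simulate an action that fails twice with a "transient" error and then succeeds.
int calls = 0;
int result = Retry.Execute(
    () =>
    {
        calls++;
        if (calls < 3) throw new TimeoutException("simulated transient failure");
        return 42;
    },
    maxTries: 5,
    baseDelay: TimeSpan.FromMilliseconds(10),
    isTransient: ex => ex is TimeoutException);

Console.WriteLine($"{result} after {calls} calls"); // 42 after 3 calls

// Hypothetical helper, not part of any library.
static class Retry
{
    // Runs 'action' up to 'maxTries' times. After a transient failure it waits
    // baseDelay, 2x baseDelay, 4x baseDelay, ... before the next attempt.
    public static T Execute<T>(Func<T> action, int maxTries, TimeSpan baseDelay, Func<Exception, bool> isTransient)
    {
        for (int attempt = 1; ; attempt++)
        {
            try
            {
                return action();
            }
            catch (Exception ex) when (attempt < maxTries && isTransient(ex))
            {
                double factor = Math.Pow(2, attempt - 1);
                Thread.Sleep(TimeSpan.FromMilliseconds(baseDelay.TotalMilliseconds * factor));
            }
        }
    }
}
```

Non-transient exceptions, and the last failed attempt, are not caught and bubble up to the caller.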

During my research I found a good overview: Implementing Resilient Applications.

Hope this helps!

New in .NET 8.0 [37]: Consolidating All Build Artifacts into One Directory

Entity Framework Core 8.0 Breaking Changes & SQL Compatibility Level

We recently switched from .NET 6 to .NET 8 and encountered the following Entity Framework Core error:

    Microsoft.Data.SqlClient.SqlException: 'Incorrect syntax near the keyword 'WITH'....

The EF code uses the Contains method as shown below:

    var names = new[] { "Blog1", "Blog2" };

    var blogs = await context.Blogs
        .Where(b => names.Contains(b.Name))
        .ToArrayAsync();

Before .NET 8, this would result in the following SQL statement:

    SELECT [b].[Id], [b].[Name]
    FROM [Blogs] AS [b]
    WHERE [b].[Name] IN (N'Blog1', N'Blog2')

… and with .NET 8 it uses the OPENJSON function, which is not supported on older versions like SQL Server 2014 or when the compatibility level is below 130 (!)

- See this blog post for more information about the OPENJSON change.

The fix is “simple”

Ensure you’re not using an unsupported SQL Server version and that the compatibility level is at least 130.

If you can’t change the system, then you could also enforce the “old” behavior with a setting like this (not recommended, because it is slower!)

    ...
    .UseSqlServer(@"<CONNECTION STRING>", o => o.UseCompatibilityLevel(120));

How to make sure your database is on Compatibility Level 130?

Run this statement to check the compatibility level:

    SELECT name, compatibility_level FROM sys.databases;

We updated our test/dev SQL Server and then raised all databases to compatibility level 150 with this SQL script:

    DECLARE @DBName NVARCHAR(255)
    DECLARE @SQL NVARCHAR(MAX)

    -- Cursor to loop through all databases
    DECLARE db_cursor CURSOR FOR
    SELECT name
    FROM sys.databases
    WHERE name NOT IN ('master', 'tempdb', 'model', 'msdb') -- Exclude system databases

    OPEN db_cursor
    FETCH NEXT FROM db_cursor INTO @DBName

    WHILE @@FETCH_STATUS = 0
    BEGIN
        -- Construct the ALTER DATABASE command (QUOTENAME escapes special characters in the name)
        SET @SQL = N'ALTER DATABASE ' + QUOTENAME(@DBName) + N' SET COMPATIBILITY_LEVEL = 150;'
        EXEC sp_executesql @SQL

        FETCH NEXT FROM db_cursor INTO @DBName
    END

    CLOSE db_cursor
    DEALLOCATE db_cursor

Check EF Core Breaking Changes

There are other breaking changes, but only the first one affected us: Breaking Changes

Hope this helps!

Developer Community Conference on September 27 and 28, 2024 in Essen

Take Courage! Finally Out of Scrum Hell

New in .NET 8.0 [38]: Creating Container Files via dotnet publish

OpenAI API, LM Studio and Ollama

This is more of a “Today-I-Learned” post and not a “full-blown How-To article.” If something is completely wrong, please let me know - thanks!

I had the opportunity to attend the .NET User Group Dresden at the beginning of September for the exciting topic “Using Open Source LLMs” and learned a couple of things.

How to choose an LLM?

There are tons of LLMs (= Large Language Models) that can be used, but which one should we choose? There is no general answer to that - of course - but there is a Chatbot Arena Leaderboard, which compares the “cleverness” of those models. Be aware of the license of each model.

There is also a HuggingChat, where you can pick some models and experiment with them.

For your first steps on your local hardware: Phi3 does a good job and is not a huge model.

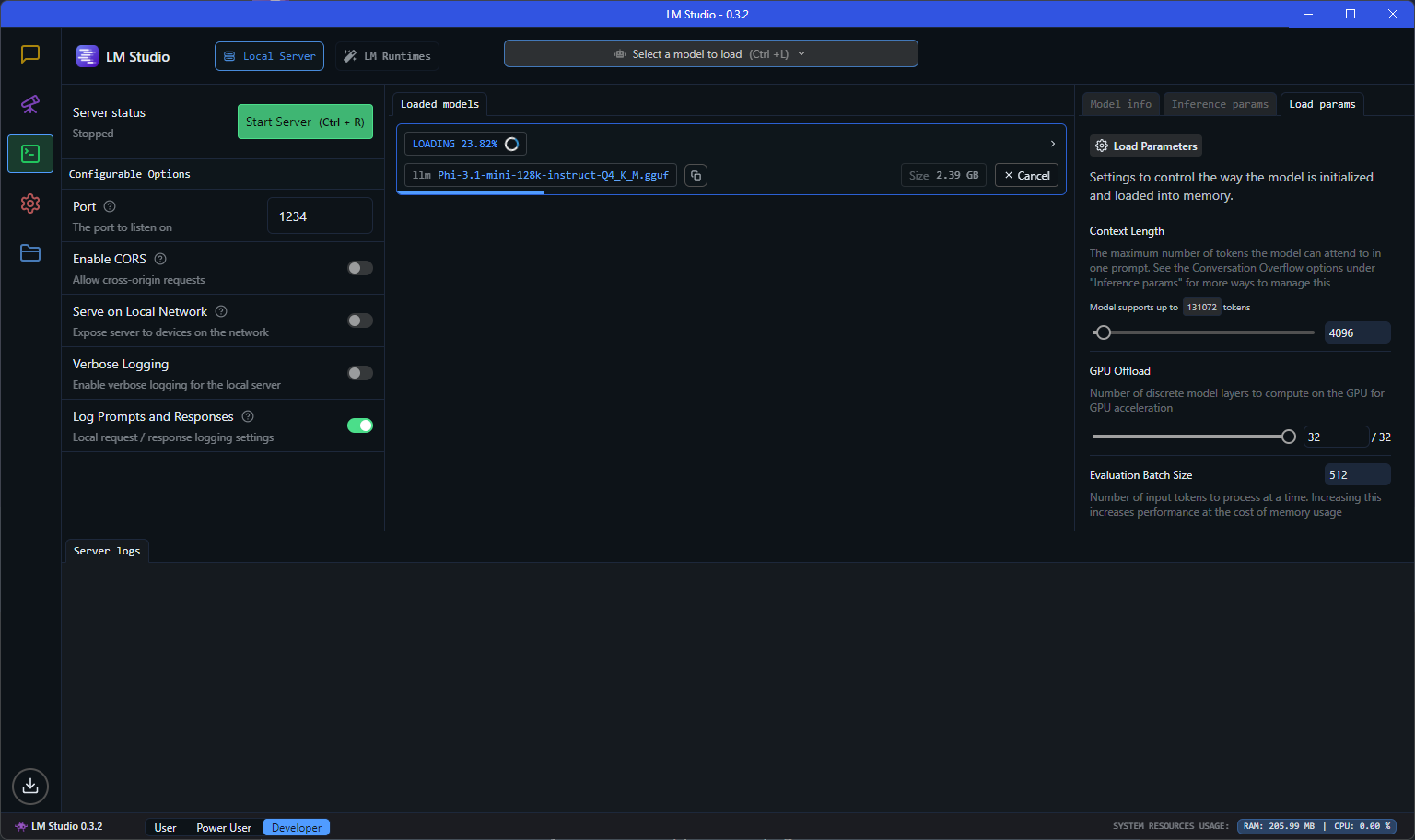

LM Studio

Ok, you have a model and an idea, but how to play with it on your local machine?

The best tool for such a job is: LM Studio.

The most interesting part was (and this was “new” to me) that you can run those local models in a local, OpenAI-compatible (!!!) server.

OpenAI Compatible server?!

If you want to experiment with a lightweight model on your system and interact with it, it is super handy if you can use the standard OpenAI client and just run it against your local “OpenAI”-like server.

Just start the server, use the localhost endpoint, and you can use code like this:

    using OpenAI.Chat;
    using System.ClientModel;

    ChatClient client = new(model: "model", "key",
        new OpenAI.OpenAIClientOptions()
        { Endpoint = new Uri("http://localhost:1234/v1") });

    ChatCompletion chatCompletion = client.CompleteChat(
    [
        new UserChatMessage("Say 'this is a test.'"),
    ]);

    Console.WriteLine(chatCompletion.Content[0].Text);

The model and the key don’t seem to matter that much (or at least it worked on my machine). The localhost:1234 service is hosted by LM Studio on my machine. The actual model can be configured in LM Studio and there is a huge choice available.

Even streaming is supported:

    AsyncCollectionResult<StreamingChatCompletionUpdate> updates
        = client.CompleteChatStreamingAsync("Write a short story about a pirate.");

    Console.WriteLine($"[ASSISTANT]:");

    await foreach (StreamingChatCompletionUpdate update in updates)
    {
        foreach (ChatMessageContentPart updatePart in update.ContentUpdate)
        {
            Console.Write(updatePart.Text);
        }
    }

Ollama

The obvious next question is: How can I run my own LLM on my own server? LM Studio works fine, but it’s just a development tool.

One answer could be: Ollama, which can run large language models and offers compatibility with the OpenAI API.
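Because of that compatibility, the same OpenAI client approach shown for LM Studio should also work against Ollama. The following is a sketch under a few assumptions: Ollama is running locally on its default port 11434, a model has already been pulled (the name "phi3" is just an example), and the key "ollama" is a dummy value because the client requires some key.

```csharp
using System;
using System.ClientModel;
using OpenAI;
using OpenAI.Chat;

// Point the standard OpenAI client at the local Ollama endpoint.
// Assumptions: default port 11434, a locally pulled model named "phi3", dummy API key.
ChatClient client = new(
    "phi3",
    new ApiKeyCredential("ollama"),
    new OpenAIClientOptions { Endpoint = new Uri("http://localhost:11434/v1") });

ChatCompletion completion = client.CompleteChat(
    new UserChatMessage("Say 'this is a test.'"));

Console.WriteLine(completion.Content[0].Text);
```

Unlike in the LM Studio example, the model name is meant to refer to a model that Ollama actually has available.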

Is there an Ollama for .NET devs?

Ollama looks cool, but I was hoping to find an “OpenAI compatible .NET facade”. I already played with LLamaSharp, but LLamaSharp doesn’t currently offer a Web API, although there are some ideas around.

My friend Gregor Biswanger released OllamaApiFacade, which looks promising. It doesn’t offer a real OpenAI-compatible .NET facade yet, but maybe this will be added in the future.

Acknowledgment

Thanks to the .NET User Group for hosting the meetup, and a special thanks to my good friend Oliver Guhr, who was also the speaker!

Hope this helps!

A Question of Trust: Hands Off the Home Office!

More Than Just Programming: Announcing the tech:lounge Masterclass

The Introduction of the EU Accessibility Act? That Will Be Expensive!

New in .NET 8.0 [40]: A Dedicated Workload for WASI

End of the .NET 8.0 Series: .NET 9.0 Is Coming Soon

75 Percent of All Software Projects Fail: What to Do?

JavaScript Runtime Deno 2.0: Is the New Version the Better Node.js?!

Book Tip: Programming Neural Networks Yourself

Technical Books on .NET 9.0, C# 13.0, Entity Framework Core 9.0, and Blazor 9.0

Microsoft Defender Performance Analyzer

This is more of a “Today-I-Learned” post and not a “full-blown How-To article.” If something is completely wrong, please let me know - thanks!

A customer notified us that our product was slowing down their Microsoft Office installation at startup. Everything on our side seemed fine, but sometimes Office took 10–15 seconds to start.

After some research, I stumbled upon this: Performance analyzer for Microsoft Defender Antivirus.

How to run the Performance Analyzer

The best part about this application is how easy it is to use (as long as you have a prompt with admin privileges). Simply run this PowerShell command:

    New-MpPerformanceRecording -RecordTo recording.etl

This will start the recording session. After that, launch the program you want to analyze (e.g., Microsoft Office). When you’re done, press Enter to stop the recording.

The generated recording.etl file can be complex to read and understand. However, there’s another command to extract the “Top X” scans, which makes the data way more readable.

Use this command to generate a CSV file containing the top 1,000 files scanned by Defender during that time:

    (Get-MpPerformanceReport -Path .\recording.etl -TopScans 1000).TopScans | Export-CSV -Path .\recording.csv -Encoding UTF8 -NoTypeInformation

Using this tool, we discovered that Microsoft Defender was scanning all our assemblies, which was causing Office to start so slowly.

Now you know: If you ever suspect that Microsoft Defender is slowing down your application, just check the logs.

Note: After this discovery, the customer adjusted their Defender settings, and everything worked as expected.

Hope this helps!

DDD, the Natural Evolution of the IT Industry

Training Courses On-Site and Virtual: tech:lounge Live! and tech:lounge 360°

Low-Code & Co.: More Harm Than Good?

Architecture Is Overrated, and What We Can Learn from It

New in .NET 9.0 [1]: Start of the New Blog Series

New in .NET 9.0 [2]: Support for .NET 9.0

New in .NET 9.0 [3]: Features in the C# 13.0 Programming Language

WinINet, WinHTTP and the HttpClient from .NET

This is more of a “Today-I-Learned” post and not a “full-blown How-To article.” If something is completely wrong, please let me know - thanks!

A customer inquiry brought the topic of “WinINet” and “WinHTTP” to my attention. This blog post is about finding out what this is all about and how and whether or not these components are related to the HttpClient of the .NET Framework or .NET Core.

General

Both WinINet and WinHTTP are Windows components that provide APIs for communication via the HTTP/HTTPS protocol. A detailed comparison page can be found here.

WinINet

WinINet is intended in particular for client applications (such as a browser or other applications that communicate via HTTP). In addition to pure HTTP communication, WinINet also has configuration options for proxies, cookie and cache management.

However, WinINet is not intended for building server-side applications or very scalable applications.

WinHTTP

WinHTTP is responsible for the last use case, which even runs a “kernel module” and is therefore much more performant.

.NET HttpClient

At first glance, it sounds as if the HttpClient should access WinINet from the .NET Framework or .NET Core (or .NET 5, 6, 7, …) - but this is not the case.

Instead:

The .NET Framework relies on WinHTTP. Before .NET Core 2.1, the substructure was also based on WinHTTP.

Since .NET Core 2.1, however, a platform-independent “SocketsHttpHandler” works under the HttpClient by default. The HttpClient can still partially read the proxy settings from the WinINet world.

The “WinHttpHandler” is still available as an option, although the use case for this is unknown to me.
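As a small sketch of how the handler choice looks in code: the default handler can be configured explicitly, and the WinHTTP-based one can be opted into on Windows. This assumes .NET 5 or later and, for the second part, the System.Net.Http.WinHttpHandler NuGet package; the connection lifetime value and the example URL are arbitrary.

```csharp
using System;
using System.Net.Http;

// Default in modern .NET: the managed SocketsHttpHandler, configured explicitly here.
var socketsHandler = new SocketsHttpHandler
{
    PooledConnectionLifetime = TimeSpan.FromMinutes(2)
};
using var client = new HttpClient(socketsHandler);

// Opt-in on Windows: the WinHTTP-based handler
// (NuGet package System.Net.Http.WinHttpHandler).
if (OperatingSystem.IsWindows())
{
    using var winHttpClient = new HttpClient(new WinHttpHandler());
}

// The proxy that HttpClient uses by default, resolved for an example URL.
var proxy = HttpClient.DefaultProxy.GetProxy(new Uri("https://example.com"));
Console.WriteLine(proxy?.ToString() ?? "no proxy");
```

Printing the default proxy is a quick way to check which system proxy settings the HttpClient actually picked up on a given machine.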

During my research, I noticed this GitHub issue. This issue is about the new SocketsHttpHandler implementation not being able to access the same WinINet features for cache management. The topic is rather theoretical and the issue is already several years old.

Summary

What have we learned now? Microsoft has implemented several HTTP stacks, and in “modern” .NET the HttpClient uses its own handler.

Hope this helps!

New in .NET 9.0 [4]: Partial Properties and Partial Indexers in C# 13.0

Less Is More: What Ruins Good Code (and Good Architecture)

How Cute: You Seriously Program in This Programming Language?

New in .NET 9.0 [6]: New Escape Character for Console Output

Event Sourcing: The Better Way to Develop?

Azure Resource Groups are not just dumb folders

General

An Azure Resource Group is more or less one of the first things you need to create under your Azure subscription because most services need to be placed in an Azure Resource Group.

A resource group has a name and a region, and it feels just like a “folder,” but it’s (sadly) more complicated, and I want to showcase this with App Service Plans.

What is an App Service Plan?

If you run a Website/Web Service/Web API on Azure, one option would be Web Apps-Service.

If you are a traditional IIS developer, the “Web Apps-Service” is somewhat like a “Web Site” in IIS.

When you create a brand-new “Web Apps-Service,” you will need to create an “App Service Plan” as well.

The “App Service Plan” is the system that hosts your “Web App-Service.” The “App Service Plan” is also what actually costs you money, and you can host multiple “Web App-Services” under one “App Service Plan.”

All services need to be created in a resource group.

Recap

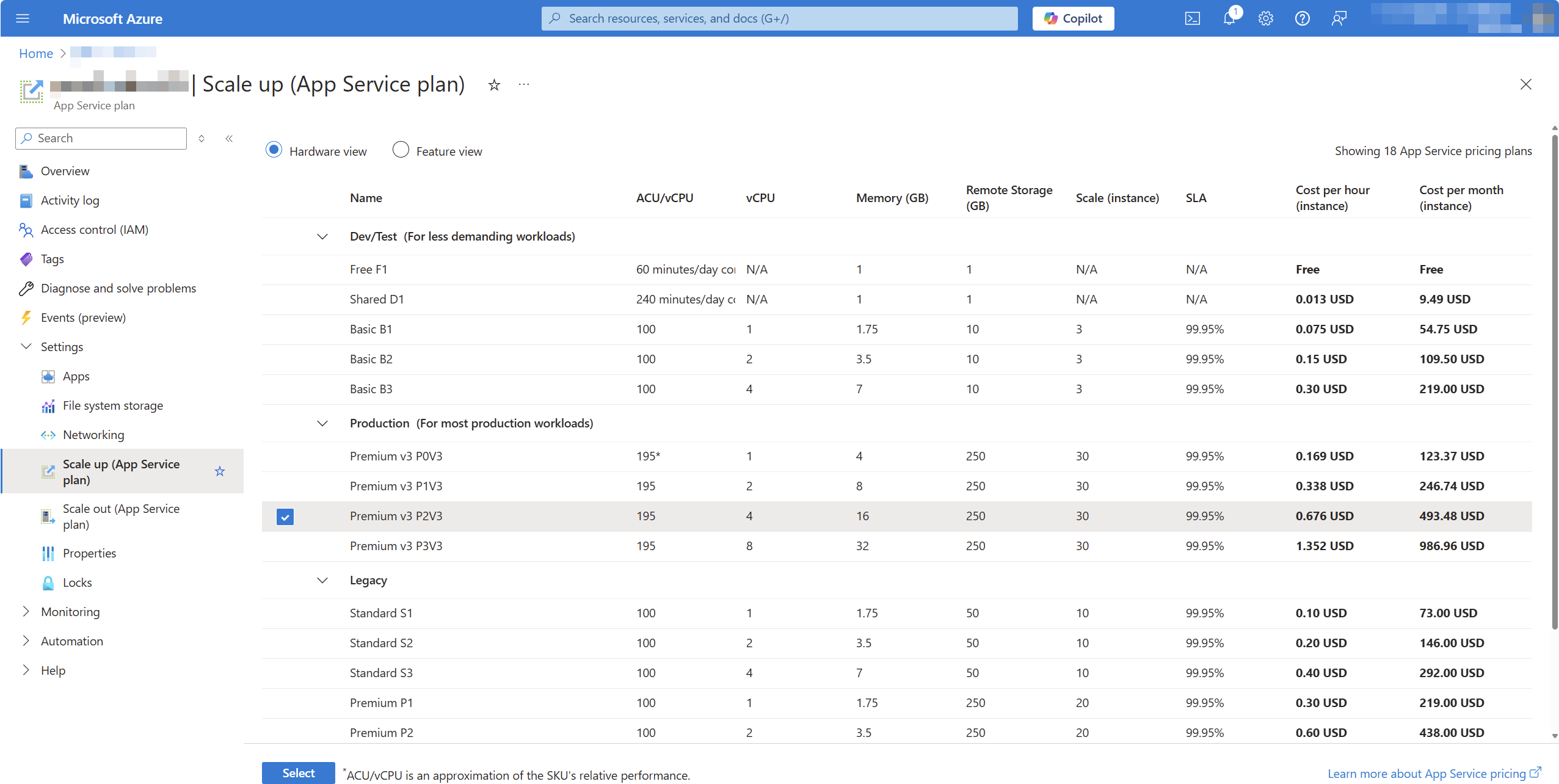

An “App Service Plan” can host multiple “Web App-Services.” The price is related to the instance count and the actual plan.

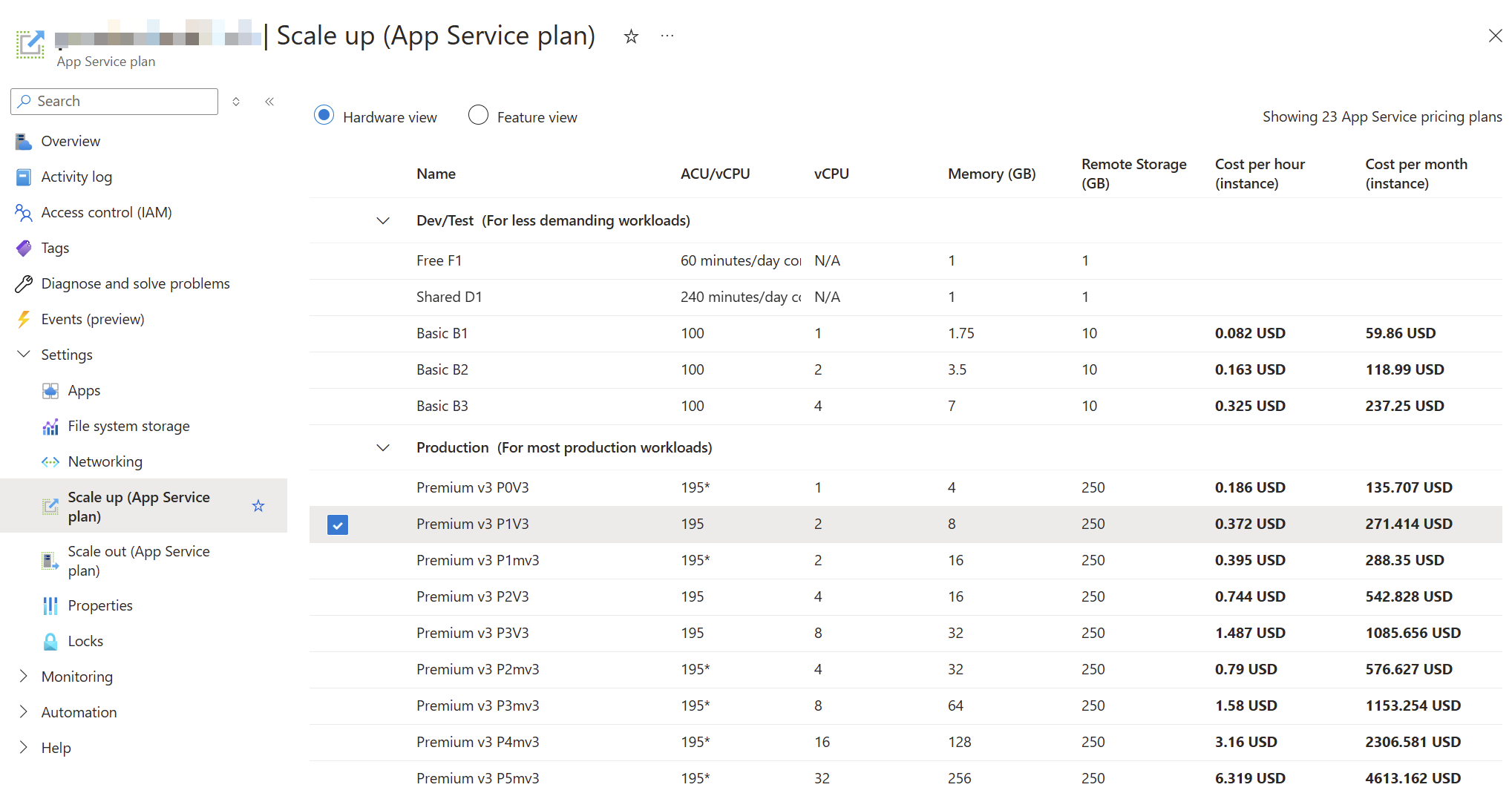

Here is a screenshot from one of our app plans:

So far, so good, right?

A few months later, we created another resource group in a different region with a new app plan and discovered that there were more plans to choose from:

Especially those memory-optimized plans (“P1mV3” etc.) are interesting for our product.

The problem

So we have two different “App Service Plans” in different resource groups, and one App Service Plan did not show the option for the memory-optimized plans.

This raises a simple question: Why, and is there an easy way to fix it?

Things that won’t work

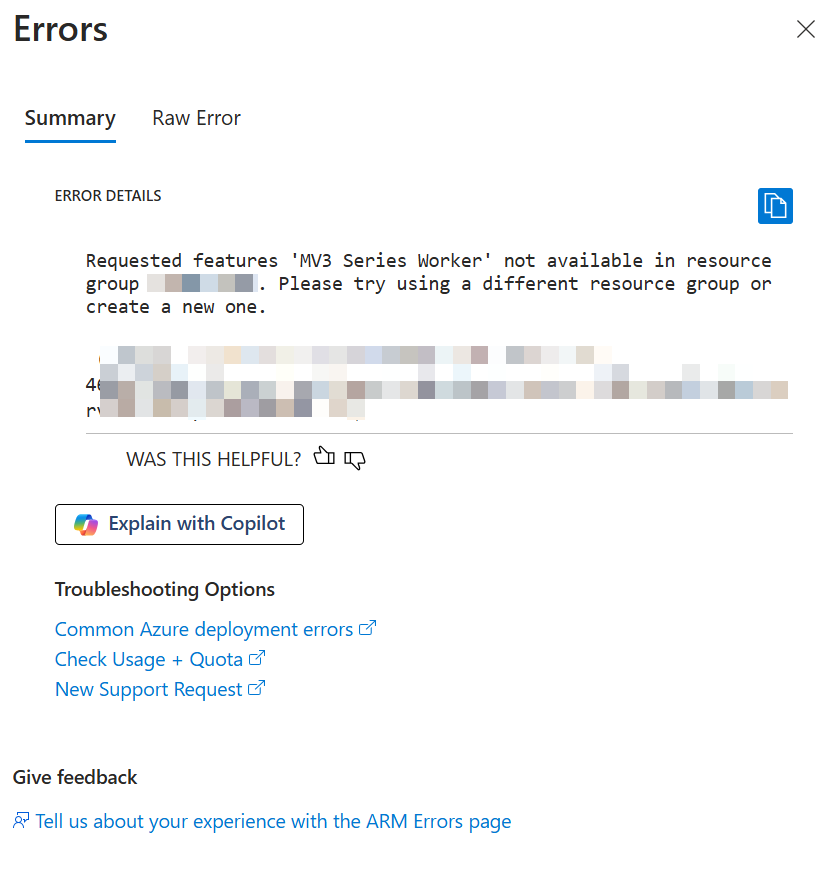

First, I created a new “App Service Plan” within the same resource group as the “old” “App Service Plan,” but this operation failed:

Then I tried to just move the existing “App Service Plan” to a new resource group, but even then, I could not change the SKU to the memory-optimized plan.

The “reason” & solution

After some frustration - since we had existing services and wanted to maintain our structure - I found this documentation site.

Scale up from an unsupported resource group and region combination

If your app runs in an App Service deployment where Premium V3 isn’t available, or if your app runs in a region that currently does not support Premium V3, you need to re-deploy your app to take advantage of Premium V3. Alternatively newer Premium V3 SKUs may not be available, in which case you also need to re-deploy your app to take advantage of newer SKUs within Premium V3. …

It seems the behavior is “as designed,” but I would say that the design is a hassle.

The documentation points out two options for this, but in the end, we will need to create a new app plan and recreate all “Web App-Services” in a new resource group.

Lessons learned?

At first glance, I thought that “resource groups” acted like folders, but underneath, depending on the region, subscription, and existing services within that resource group, some options might not be available.

Bummer, but hey… at least we learned something.